✅P346_k8s - Cluster Setup - Cluster Installation Complete

Deploy k8s-master

Initialize the master node

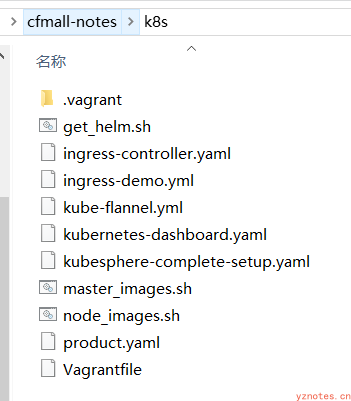

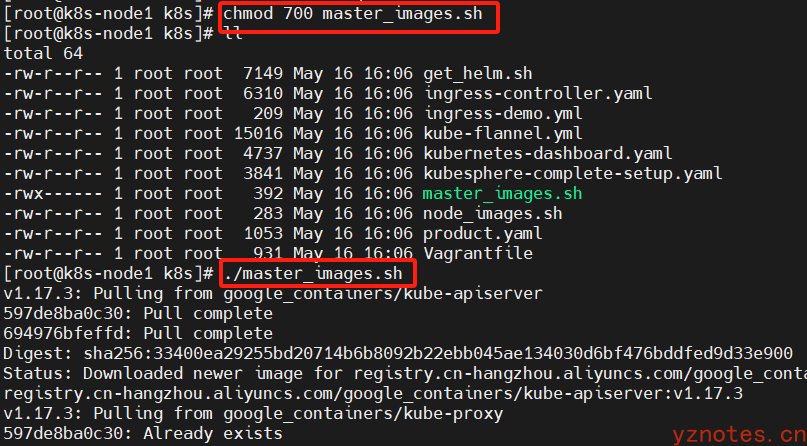

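On the master node, create and run master_images.sh to pull the required images. Concretely: copy the k8s folder onto the master VM, make the script executable with chmod 777 master_images.sh (chmod +x is sufficient), then start it with ./master_images.sh

Contents of master_images.sh: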

#!/bin/bash

images=(

kube-apiserver:v1.17.3

kube-proxy:v1.17.3

kube-controller-manager:v1.17.3

kube-scheduler:v1.17.3

coredns:1.6.5

etcd:3.4.3-0

pause:3.1

)

for imageName in "${images[@]}" ; do

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName

# docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName

done
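A slightly more defensive variant of the script above might look like the following sketch. The names `DRY_RUN`, `image_ref` and `pull_all` are illustrative and not part of the original script; with `DRY_RUN=1` (the default assumed here) it only prints the `docker pull` commands, and it stops at the first failed pull when run with `DRY_RUN=0`.

```shell
#!/bin/bash
# Sketch only: same image list as master_images.sh, with a dry-run default.
# DRY_RUN, image_ref and pull_all are illustrative names (assumptions).
REPO="registry.cn-hangzhou.aliyuncs.com/google_containers"
DRY_RUN="${DRY_RUN:-1}"

images=(
  kube-apiserver:v1.17.3
  kube-proxy:v1.17.3
  kube-controller-manager:v1.17.3
  kube-scheduler:v1.17.3
  coredns:1.6.5
  etcd:3.4.3-0
  pause:3.1
)

# Build the full mirror reference for one image name.
image_ref() {
  printf '%s/%s\n' "$REPO" "$1"
}

# Pull every image, or just print the commands when DRY_RUN=1.
pull_all() {
  local name ref
  for name in "${images[@]}"; do
    ref="$(image_ref "$name")"
    if [ "$DRY_RUN" = "1" ]; then
      echo "docker pull $ref"
    elif ! docker pull "$ref"; then
      echo "failed to pull $ref" >&2
      return 1
    fi
  done
}

pull_all
```

Running it as `DRY_RUN=0 ./master_images.sh` would perform the actual pulls.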

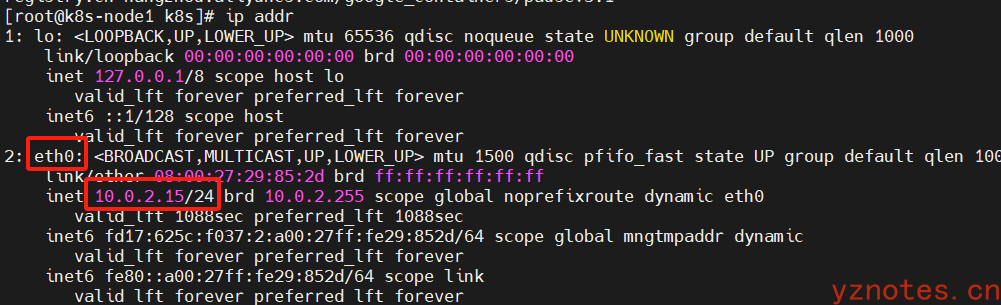

Check the IP address of the default network interface (the one used for internal communication)
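One way to read that address from the command line is sketched below. The function name `iface_ipv4` is illustrative, and the interface name eth0 is the one this guide assumes; the function only parses the one-line-per-address output of `ip -4 -o addr show`.

```shell
# Read `ip -4 -o addr show` output on stdin and print the IPv4 address
# (without the prefix length) of the interface named in $1.
iface_ipv4() {
  awk -v dev="$1" '$2 == dev && $3 == "inet" { split($4, a, "/"); print a[1]; exit }'
}

# Usage on the master node (eth0 is an assumption taken from this guide):
#   ip -4 -o addr show | iface_ipv4 eth0
```

The printed value is what goes into --apiserver-advertise-address.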

Note: as mentioned earlier, Docker 19.03.13 is compatible with Kubernetes v1.17.3, and the following command succeeds with that combination:

kubeadm init \

--apiserver-advertise-address=10.0.2.15 \

--image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \

--kubernetes-version v1.17.3 \

--service-cidr=10.96.0.0/16 \

--pod-network-cidr=10.244.0.0/16

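Notes:

- apiserver-advertise-address=10.0.2.15: the IP address of the master host, i.e. the address of the eth0 interface shown above. It is the address the API server advertises to the rest of the cluster.

- service-cidr=10.96.0.0/16: the IP address range from which Kubernetes Services are assigned their addresses.

- pod-network-cidr=10.244.0.0/16: the IP address range for Pod-to-Pod networking, so that every Pod gets a unique IP address. It matches the "Network" value in net-conf.json in kube-flannel.yml.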
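The service range, the Pod range and the host address should not overlap. The following is a minimal bash sketch for checking that; the function names are illustrative and it handles IPv4 only.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print "first last" (as integers) for a CIDR such as 10.96.0.0/16.
cidr_bounds() {
  local ip="${1%/*}" bits="${1#*/}"
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  local first=$(( $(ip_to_int "$ip") & mask ))
  echo "$first $(( first | (~mask & 0xFFFFFFFF) ))"
}

# Return 0 if the two CIDR blocks share at least one address.
cidr_overlap() {
  local a1 a2 b1 b2
  read -r a1 a2 <<< "$(cidr_bounds "$1")"
  read -r b1 b2 <<< "$(cidr_bounds "$2")"
  [ "$a1" -le "$b2" ] && [ "$b1" -le "$a2" ]
}

if cidr_overlap 10.96.0.0/16 10.244.0.0/16; then
  echo "service-cidr and pod-network-cidr overlap"
else
  echo "service-cidr and pod-network-cidr are disjoint"
fi
```

The host address can be checked the same way by writing it as a /32, for example `cidr_overlap 10.0.2.15/32 10.96.0.0/16`.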

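With Docker 26.1.3 and Kubernetes v1.17.3, kubeadm reports that the Docker version is not on its validated list. In the run below that message is only a warning; the fatal preflight errors are the manifest files already present under /etc/kubernetes/manifests and port 10250 being in use, both left over from an earlier init attempt (see the troubleshooting notes near the end). The output was: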

[root@k8s-node1 k8s]# kubeadm init --apiserver-advertise-address=10.0.2.15 --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers --kubernetes-version v1.17.3 --service-cidr=10.96.0.0/16 --pod-network-cidr=10.244.0.0/16

W0516 16:30:11.116306 8955 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0516 16:30:11.116351 8955 validation.go:28] Cannot validate kubelet config - no validator is available

[init] Using Kubernetes version: v1.17.3

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 26.1.3. Latest validated version: 19.03

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR FileAvailable--etc-kubernetes-manifests-kube-apiserver.yaml]: /etc/kubernetes/manifests/kube-apiserver.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-kube-controller-manager.yaml]: /etc/kubernetes/manifests/kube-controller-manager.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-kube-scheduler.yaml]: /etc/kubernetes/manifests/kube-scheduler.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-etcd.yaml]: /etc/kubernetes/manifests/etcd.yaml already exists

[ERROR Port-10250]: Port 10250 is in use

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

Output of a successful initialization:

[root@k8s-node1 ~]# kubeadm init \

> --apiserver-advertise-address=10.0.2.15 \

> --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \

> --kubernetes-version v1.17.3 \

> --service-cidr=10.96.0.0/16 \

> --pod-network-cidr=10.244.0.0/16

W1205 16:27:16.474893 1854 validation.go:28] Cannot validate kube-proxy config - no validator is available

W1205 16:27:16.474934 1854 validation.go:28] Cannot validate kubelet config - no validator is available

[init] Using Kubernetes version: v1.17.3

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-node1 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.0.2.15]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-node1 localhost] and IPs [10.0.2.15 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-node1 localhost] and IPs [10.0.2.15 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

W1205 16:27:20.881469 1854 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-scheduler"

W1205 16:27:20.882082 1854 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

[apiclient] All control plane components are healthy after 41.504079 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.17" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s-node1 as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node k8s-node1 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: puga0v.g031etuhh85u1dyf

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.0.2.15:6443 --token puga0v.g031etuhh85u1dyf \

--discovery-token-ca-cert-hash sha256:67346c939b8f7088a7fa848da3fc5024d64013931fd4ccb15462685c1f4f9294

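The default image registry, k8s.gcr.io, is not reachable from mainland China, so the Alibaba Cloud mirror is specified here. Pulling during init may still fail, so you can pull the images manually beforehand with our images script (master_images.sh).

The address registry.aliyuncs.com/google_containers also works.

Background: Classless Inter-Domain Routing (CIDR) is a method of allocating IP addresses to users and of grouping IP addresses so that IP packets can be routed efficiently on the Internet. A block is written as a base address plus a prefix length, for example 10.96.0.0/16.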
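To make the CIDR notation concrete, here is a small worked calculation in bash. The function names are illustrative; the /24 per-node block is an assumption based on flannel's usual default and is not stated in this guide.

```shell
# Number of addresses in a CIDR block: 2^(32 - prefix length).
cidr_size() {
  local bits="${1#*/}"
  echo $(( 1 << (32 - bits) ))
}

# How many blocks with prefix length $2 fit into one block with prefix length $1.
subnet_count() {
  echo $(( 1 << ($2 - $1) ))
}

echo "10.96.0.0/16 holds $(cidr_size 10.96.0.0/16) addresses"
echo "a /16 splits into $(subnet_count 16 24) blocks of size /24, each with $(cidr_size 10.244.1.0/24) addresses"
```

So a /16 Pod network leaves room for 256 per-node /24 blocks under that assumption.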

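Once the command finishes, copy the cluster join token (the kubeadm join command) right away.

Test kubectl (run on the master node)

Following the instructions printed by kubeadm init above, run these commands: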

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

The master is currently in the NotReady state. It becomes Ready once the Pod network add-on has been installed.

[root@k8s-node1 k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-node1 NotReady master 98s v1.17.3

# View the kubelet logs

journalctl -u kubelet

The command below is the last thing printed by the kubeadm init command above. It joins a node to the master; run it later on each worker node:

kubeadm join 10.0.2.15:6443 --token puga0v.g031etuhh85u1dyf \

--discovery-token-ca-cert-hash sha256:67346c939b8f7088a7fa848da3fc5024d64013931fd4ccb15462685c1f4f9294
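If the output of kubeadm init was saved to a file, the join command can be recovered from it later. The sketch below assumes a hypothetical log file name (kubeadm-init.log) and an illustrative function name; it joins the two-line command into a single line.

```shell
# Print the kubeadm join command, joined onto one line, from kubeadm init
# output read on stdin.
extract_join() {
  awk '
    /^[[:space:]]*kubeadm join / { found = 1 }
    found && NF {
      sub(/^[[:space:]]+/, "")      # drop leading indentation
      sub(/[[:space:]]*\\$/, "")    # drop the trailing line-continuation backslash
      line = line sep $0
      sep = " "
    }
    found && /discovery-token-ca-cert-hash/ { print line; exit }
  '
}

# Usage (kubeadm-init.log is a hypothetical file name):
#   kubeadm init ... | tee kubeadm-init.log
#   extract_join < kubeadm-init.log
```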

Install the Pod network plugin (CNI)

Install command

Run the following command on the master node (not used here):

$ kubectl apply -f \

https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

The URL above may be blocked. Instead, apply the kube-flannel.yml from the k8s folder that was downloaded locally:

[root@k8s-node1 k8s]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

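If the images referenced in kube-flannel.yml cannot be pulled, look for a replacement image on Docker Hub, or wget an alternative yml.

- Edit the yml with vi and replace every amd64 image address

- Wait about 3 minutes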
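The image replacement described above can also be done non-interactively. This is a sketch only: `swap_flannel_registry` is an illustrative name and `registry.example.com/mirror` is a placeholder, not a real mirror; substitute a registry you have verified yourself.

```shell
# Rewrite every flannel image reference read on stdin so that it points at the
# registry prefix given in $1 (the prefix must not contain a '#').
swap_flannel_registry() {
  sed "s#image: quay\.io/coreos/flannel:#image: $1/flannel:#"
}

# Usage (registry.example.com/mirror is a placeholder):
#   swap_flannel_registry registry.example.com/mirror < kube-flannel.yml > kube-flannel-mirror.yml
#   kubectl apply -f kube-flannel-mirror.yml
```

Unlike the vi approach, this rewrites the image lines for every architecture, which is harmless because only the DaemonSet matching the node architecture schedules Pods.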

List the pods in a given namespace: kubectl get pods -n kube-system

List the pods in all namespaces: kubectl get pods --all-namespaces

If the network misbehaves, bring cni0 down, reboot the VM, and test again: $ ip link set cni0 down

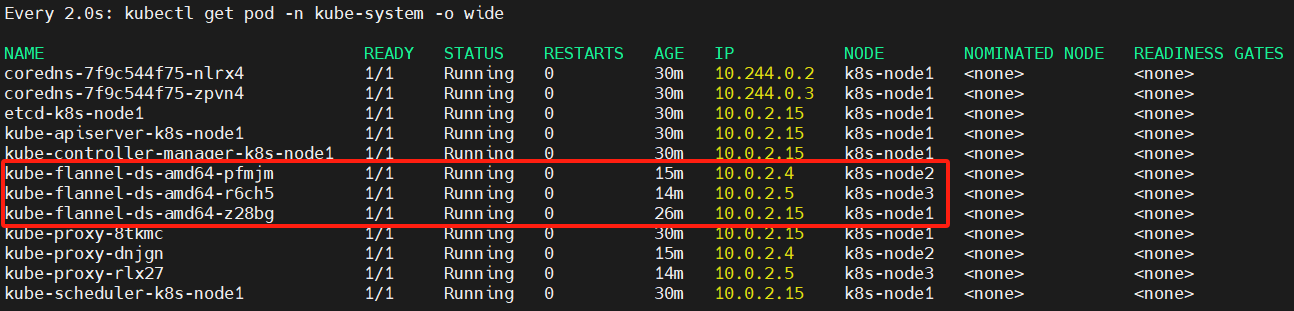

Monitor pod progress: watch kubectl get pod -n kube-system -o wide

Wait 3-10 minutes and continue only once every pod is Running!
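Instead of watching by eye, the wait can be scripted. The sketch below defines an illustrative helper, `all_running`, that reads the output of `kubectl get pods -n kube-system --no-headers` (STATUS is the third column in that layout) and succeeds only when every pod is Running.

```shell
# Succeed only if stdin has at least one pod line and every line has
# STATUS (3rd column) equal to "Running".
all_running() {
  awk '$3 != "Running" { bad = 1 } END { exit (NR == 0 || bad) }'
}

# Usage: poll every 10 seconds until everything in kube-system is Running.
#   until kubectl get pods -n kube-system --no-headers | all_running; do
#     sleep 10
#   done
```

Note that with --all-namespaces the STATUS moves to the fourth column, so the helper as written only fits the single-namespace form.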

List the namespaces

[root@k8s-node1 k8s]# kubectl get ns

NAME STATUS AGE

default Active 58m

kube-node-lease Active 58m

kube-public Active 58m

kube-system Active 58m

[root@k8s-node1 k8s]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-7f9c544f75-4pzb5 0/1 Pending 0 3m57s

kube-system coredns-7f9c544f75-qjsjt 0/1 Pending 0 3m57s

kube-system etcd-k8s-node1 1/1 Running 2 3m53s

kube-system kube-apiserver-k8s-node1 1/1 Running 2 3m53s

kube-system kube-controller-manager-k8s-node1 1/1 Running 2 3m53s

kube-system kube-flannel-ds-amd64-8gg8j 1/1 Running 0 88s

kube-system kube-proxy-bqvtv 1/1 Running 0 3m58s

kube-system kube-scheduler-k8s-node1 1/1 Running 2 3m53s

Check the master node's status

Note: the STATUS must be Ready before the commands that follow can be run.

[root@k8s-node1 k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-node1 NotReady master 59m v1.17.3

Join the nodes

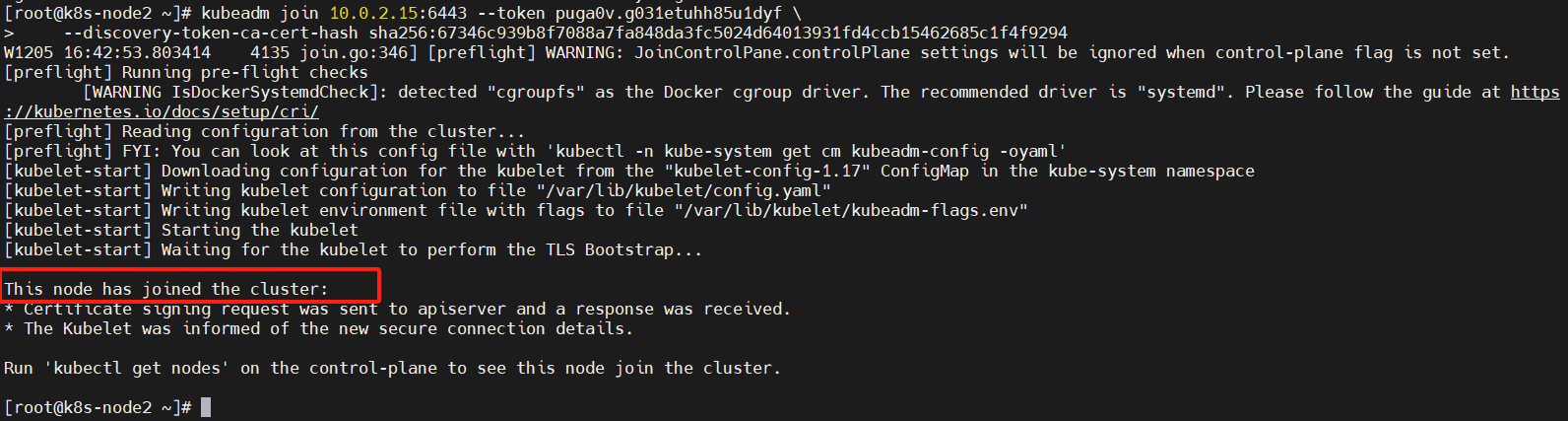

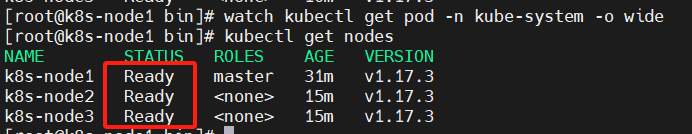

To join "k8s-node2" and "k8s-node3" to the master, run the following command on each of them:

kubeadm join 10.0.2.15:6443 --token zyxc3x.cs01s8bqbk7m7g6m --discovery-token-ca-cert-hash sha256:bdf732faf1c44ff3afa0937583d857b9c43ff357bb6a63772eecf410a53a4703

You can see that k8s-node2 has joined the cluster successfully.

List the nodes

[root@k8s-node1 k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-node1 Ready master 115m v1.17.3

k8s-node2 NotReady <none> 40m v1.17.3

k8s-node3 NotReady <none> 15m v1.17.3

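Note: k8s-node2 and k8s-node3 start out as NotReady because the flannel plugin has not finished initializing; once it has, they change to Ready. Also note that all three nodes need the CNI Plugins placed under /opt/cni/bin; for the version to download, see the pitfall "coredns stuck in ContainerCreating" below.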
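To see at a glance which nodes are still waiting, the node listing can be filtered. This is a small sketch with an illustrative function name; it reads `kubectl get nodes --no-headers` output, where STATUS is the second column.

```shell
# Print the names of nodes whose STATUS (2nd column) is not exactly "Ready".
# A cordoned node ("Ready,SchedulingDisabled") is also reported.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Usage:
#   kubectl get nodes --no-headers | not_ready_nodes
```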

Command to monitor pod progress:

watch kubectl get pod -n kube-system -o wide

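Join a Kubernetes Node

Run this on each worker node to add it to the cluster: execute the kubeadm join command printed by kubeadm init.

What if the token has expired? Create a new one on the master; the second form, with --ttl 0, creates a token that never expires: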

kubeadm token create --print-join-command

kubeadm token create --ttl 0 --print-join-command

kubeadm join --token y1eyw5.ylg568kvohfdsfco --discovery-token-ca-cert-hash sha256:6c35e4f73f72afd89bf1c8c303ee55677d2cdb1342d67bb23c852aba2efc7c73
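When assembling a join command by hand from a fresh token, a quick format check can catch copy-paste mistakes. The sketch below uses an illustrative function name; it relies on the bootstrap token format (6 characters, a dot, 16 characters, all lowercase letters or digits) and on the sha256:<64 hex digits> form of the CA certificate hash.

```shell
# Print a kubeadm join command after checking the token and hash formats.
# Arguments: <api-server host:port> <token> <sha256:hash>
build_join() {
  local endpoint="$1" token="$2" hash="$3"
  if ! [[ "$token" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]; then
    echo "bad token format: $token" >&2
    return 1
  fi
  if ! [[ "$hash" =~ ^sha256:[0-9a-f]{64}$ ]]; then
    echo "bad CA cert hash format: $hash" >&2
    return 1
  fi
  echo "kubeadm join $endpoint --token $token --discovery-token-ca-cert-hash $hash"
}

# Example with the values printed by kubeadm init earlier in this guide:
build_join 10.0.2.15:6443 puga0v.g031etuhh85u1dyf \
  sha256:67346c939b8f7088a7fa848da3fc5024d64013931fd4ccb15462685c1f4f9294
```

The check is purely syntactic; it cannot tell whether the token is still valid on the master.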

Monitor pod progress

watch kubectl get pod -n kube-system -o wide

Wait 3-10 minutes; once every pod is Running, check the node status with kubectl get nodes

Pitfalls

Error during master initialization

The following command fails:

kubeadm init \

--apiserver-advertise-address=10.0.2.15 \

--image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \

--kubernetes-version v1.17.3 \

--service-cidr=10.96.0.0/16 \

--pod-network-cidr=10.244.0.0/16

Fix: reset with the kubeadm reset command, then run kubeadm init again

kubeadm reset

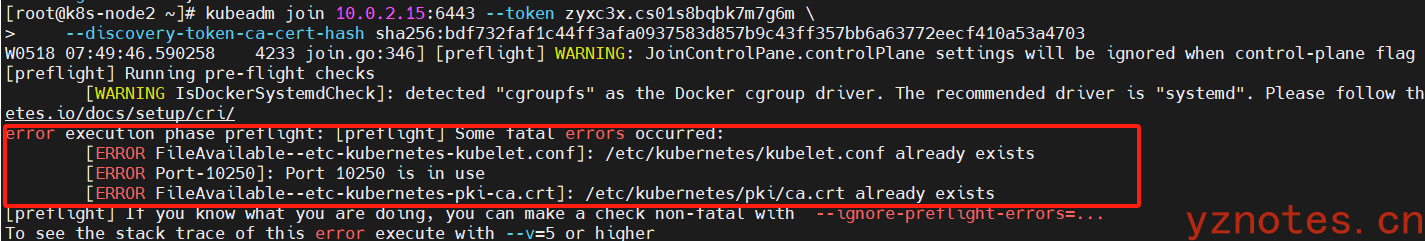

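Port 10250 is in use and files already exist

- /etc/kubernetes/kubelet.conf already exists: this usually means the node was previously added to another Kubernetes cluster, or an earlier attempt to join was interrupted and left the configuration file behind. Delete it.

- /etc/kubernetes/bootstrap-kubelet.conf already exists as well: this is the bootstrap configuration file the node uses when it first connects to the cluster.

- Port 10250 is in use: this is the port the kubelet listens on, and the API server connects to it. If another process already holds it, the kubelet cannot start. Deleting /var/lib/kubelet/* and /etc/kubernetes resolves it.

- /etc/kubernetes/pki/ca.crt already exists: this indicates certificate and key material from an existing Kubernetes cluster. Delete it.

Fix:

Find and stop the process that is occupying port 10250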

[root@k8s-node2 system]# netstat -ntlup|grep 10250

tcp6 0 0 :::10250 :::* LISTEN 2887/kubelet

[root@k8s-node2 system]# kill -9 2887

Delete the files that already exist

[root@k8s-node2 ~]# rm -rf /etc/kubernetes/kubelet.conf

[root@k8s-node2 ~]# rm -rf /etc/kubernetes/bootstrap-kubelet.conf

[root@k8s-node2 ~]# rm -rf /etc/kubernetes/pki/ca.crt
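The cleanup steps can be collected into one script, sketched below. Two things here are assumptions rather than what was run above: the kubelet is stopped with `systemctl stop kubelet` instead of `kill -9` (a killed kubelet may simply be restarted by systemd), and `DRY_RUN`, `run` and `cleanup_node` are illustrative names. With the default `DRY_RUN=1` it only prints the commands.

```shell
# Sketch of the cleanup; DRY_RUN=1 (the default here) only prints the commands.
DRY_RUN="${DRY_RUN:-1}"

# Run a command, or just print it when DRY_RUN=1.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

cleanup_node() {
  run systemctl stop kubelet                          # frees port 10250
  run rm -f /etc/kubernetes/kubelet.conf
  run rm -f /etc/kubernetes/bootstrap-kubelet.conf
  run rm -f /etc/kubernetes/pki/ca.crt
}

cleanup_node
```

Run it as root with `DRY_RUN=0` on the affected node once the printed commands look right.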

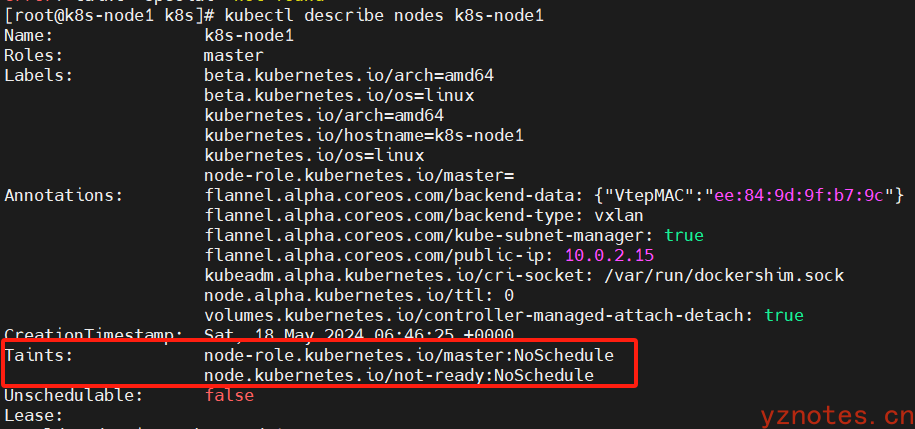

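Pod stuck in the Pending state

Common reasons a Pod stays in the Pending state:

- The node does not have enough resources;

- The Node has a taint that the Pod does not tolerate;

- A bug in an older version of kube-scheduler;

- And so on.

In my case the cause was a taint on the Node that the Pod did not tolerate. The fix went as follows:

Run kubectl describe node <node-name> to see which taints are set on the Node: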

[root@k8s-node1 k8s]# kubectl describe nodes k8s-node1

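Result:

Remove the taints from k8s-node1 with the following commands (removing node-role.kubernetes.io/master also lets ordinary Pods be scheduled on the master):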

[root@k8s-node1 k8s]# kubectl taint nodes k8s-node1 node-role.kubernetes.io/master-

[root@k8s-node1 k8s]# kubectl taint nodes k8s-node1 node.kubernetes.io/not-ready-

After the taints were removed from k8s-node1 and some time had passed, the pods changed to Running and the logs looked normal.
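To list only the taints rather than reading the whole describe output, the "Taints:" block can be filtered out. This sketch uses an illustrative function name and assumes the usual layout of `kubectl describe node`, where "Taints:" starts a block whose continuation lines are indented.

```shell
# Print one taint per line from `kubectl describe node <name>` output on stdin.
# Prints nothing when the node reports "Taints: <none>".
list_taints() {
  awk '
    /^Taints:/ {
      in_taints = 1
      sub(/^Taints:[[:space:]]*/, "")
      if ($0 != "<none>") print
      next
    }
    in_taints && /^[[:space:]]/ { sub(/^[[:space:]]+/, ""); print; next }
    in_taints { exit }   # the next unindented field ends the block
  '
}

# Usage:
#   kubectl describe node k8s-node1 | list_taints
```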

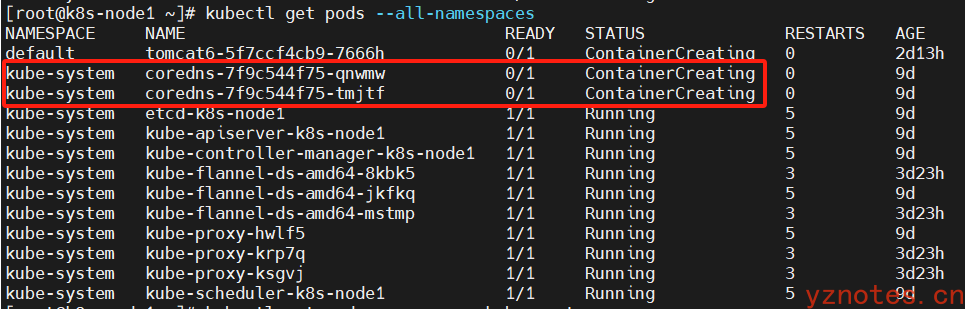

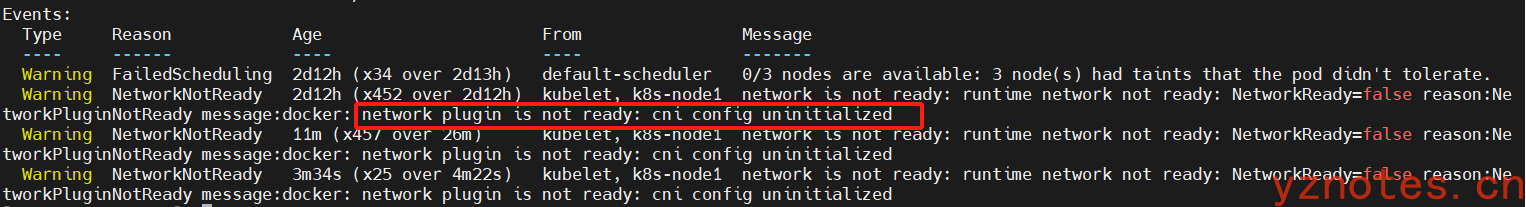

coredns stuck in ContainerCreating

Investigate with kubectl describe pod <pod-name> --namespace=kube-system, as follows:

[root@k8s-node1 ~]# kubectl describe pod coredns-7f9c544f75-qnwmw --namespace=kube-system

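Result:

Error: the required CNI plugins are not installed on the k8s-node1 node

Fix: the CNI plugins, including flannel, must be installed on all three nodes: k8s-node1, k8s-node2 and k8s-node3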

sudo mkdir -p /opt/cni/bin

cd /opt/cni/bin

# Download the archive (from version 1.0.0 onward, CNI Plugins no longer includes flannel): cni-plugins-linux-amd64-v0.8.6.tgz

https://github.com/containernetworking/plugins/releases/tag/v0.8.6

# Extract it under /opt/cni/bin

tar zxvf cni-plugins-linux-amd64-v0.8.6.tgz
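The download and extraction can be scripted for all three nodes. The sketch below is an assumption-laden convenience, not part of the original steps: the function names are illustrative, the download URL follows the GitHub release asset naming for this project, and wget is assumed to be installed.

```shell
CNI_VERSION="v0.8.6"
ARCH="amd64"

# Build the release asset URL for a given version and architecture.
cni_url() {
  printf 'https://github.com/containernetworking/plugins/releases/download/%s/cni-plugins-linux-%s-%s.tgz\n' "$1" "$2" "$1"
}

# Download the archive to /tmp and unpack it into /opt/cni/bin.
install_cni() {
  local url tgz
  url="$(cni_url "$CNI_VERSION" "$ARCH")"
  tgz="/tmp/${url##*/}"
  sudo mkdir -p /opt/cni/bin || return 1
  wget -O "$tgz" "$url" || return 1
  sudo tar -xzf "$tgz" -C /opt/cni/bin
}

# Call install_cni on each of k8s-node1, k8s-node2 and k8s-node3.
```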

kube-flannel.yml

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
    - configMap
    - secret
    - emptyDir
    - hostPath
  allowedHostPaths:
    - pathPrefix: "/etc/cni/net.d"
    - pathPrefix: "/etc/kube-flannel"
    - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
    - min: 0
      max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'

---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups: ['extensions']
    resources: ['podsecuritypolicies']
    verbs: ['use']
    resourceNames: ['psp.flannel.unprivileged']
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch

---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
  - kind: ServiceAccount
    name: flannel
    namespace: kube-system

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system

---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - amd64
      hostNetwork: true
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.11.0-amd64
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.11.0-amd64
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq
            - --kube-subnet-mgr
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: false
            capabilities:
              add: ["NET_ADMIN"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm64
      hostNetwork: true
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.11.0-arm64
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.11.0-arm64
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq
            - --kube-subnet-mgr
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: false
            capabilities:
              add: ["NET_ADMIN"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm
      hostNetwork: true
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.11.0-arm
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.11.0-arm
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq
            - --kube-subnet-mgr
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: false
            capabilities:
              add: ["NET_ADMIN"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - ppc64le
      hostNetwork: true
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.11.0-ppc64le
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.11.0-ppc64le
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq
            - --kube-subnet-mgr
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: false
            capabilities:
              add: ["NET_ADMIN"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - s390x
      hostNetwork: true
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.11.0-s390x
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.11.0-s390x
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq
            - --kube-subnet-mgr
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: false
            capabilities:
              add: ["NET_ADMIN"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg